What is a GDS Assessment? It is an assessment run by the Government Digital Service (GDS) to ensure that a service developed within government meets the Digital Service Standard. It takes place at the end of alpha, private beta and public beta. For academic readers: to a digital team, the GDS service assessment is a bit like a viva is to a doctoral researcher (though the viva is harder and scarier!)

Service being assessed: our new childminder registration service, which was in private beta.

The Assessment Day

Our GDS assessment was scheduled to run from 12 noon until 4pm. I travelled over early in the morning from Greater Manchester, picking up some biscuits en route. As I had joined the team as a user researcher just a month before the assessment, the plan was for me to present the research I had conducted so far in private beta, along with the future research plan, and for the head of our team to present the remaining research conducted since the alpha assessment.

The assessment started with brief round-the-table introductions of the people on our team and the 5 assessors, followed by the service manager providing the context of the service. This was followed by a 40-minute demo of the new service. After that, the session was very structured and followed the agenda provided beforehand, which set out the specific areas the assessors would focus on to check that the service met the Digital Service Standard. There is useful information on GOV.UK about what happens at an assessment, and some useful advice here and here too.

Top five things I learnt as a user researcher:

- The service assessment criteria are your best friend – The assessors will assess you against the service assessment criteria based on the Digital Service Standard, which I believe are provided beforehand. Therefore, you need to plan what you will present or answer according to that list. The criteria around user needs include who your users are, the methodology used, the user needs identified, and what research you have done with people who have low or no digital skills and with people who have accessibility needs. You are also asked to provide specific examples of where user research has led to changes in design and content. It is important to keep the assessment criteria in mind when planning future research activity too.

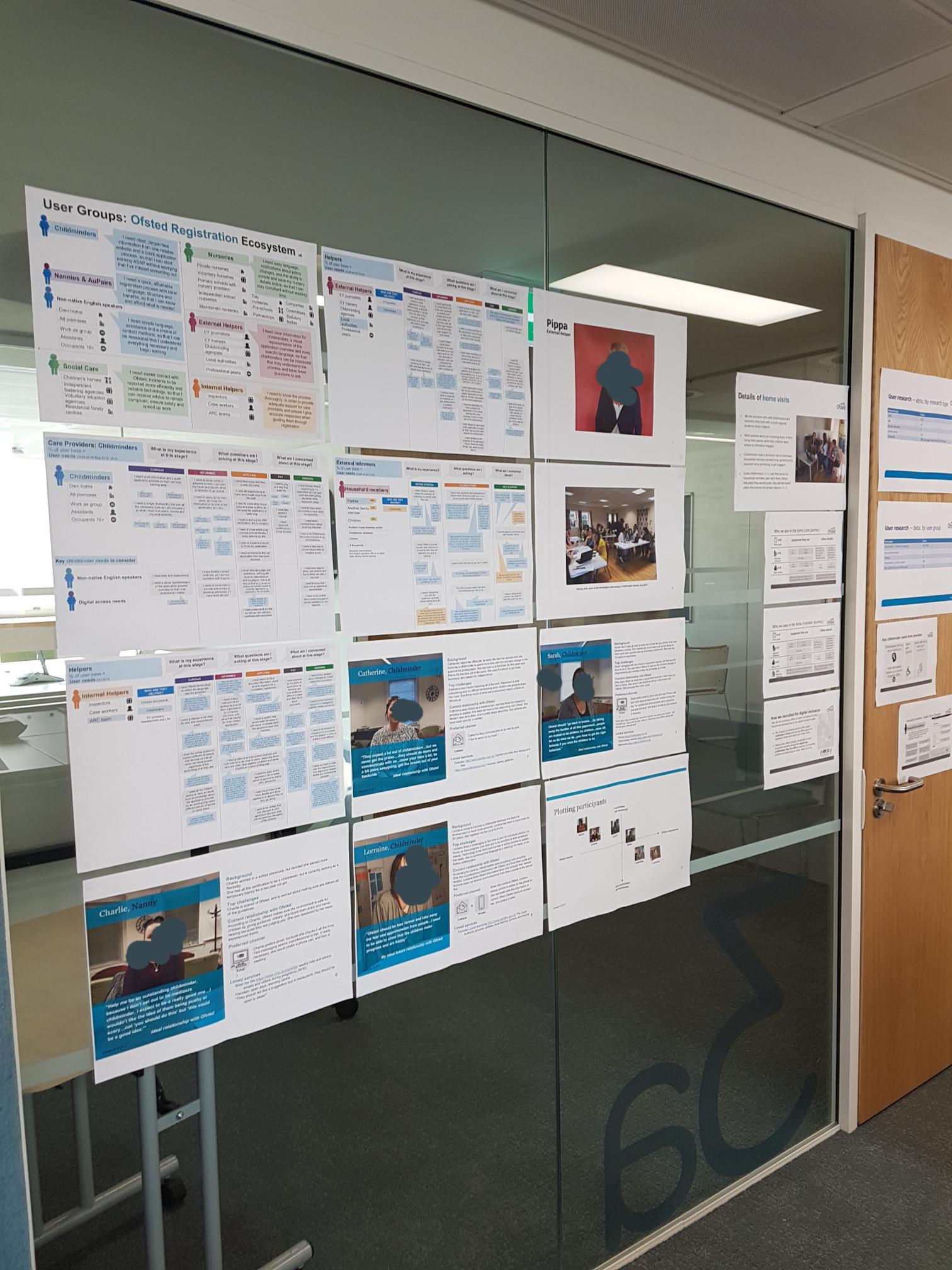

- Summarise the research & provide a few stories – It is tempting to go into lots of detail and try to cover everything you have done, but you will not have time to do this, nor is it the best use of time. Do prepare materials beforehand: if you have a lot of material, stick it up on the wall, or choose which slides to present. We decided to do a mixture of both. We put quite a lot of diagrams on the wall (such as various user need diagrams), which we referred to whilst talking and which the assessors could look at in the break or after the assessment if they wanted more detail. Our future research plan, however, was on a single slide, which we chose to show on the digital screen. Also, do have a few specific, interesting stories about your research activity that you can talk about, ideally ones that will make people laugh or smile. The panel will also want to see that everyone in the team has been involved in research, so encourage others in the team to talk about research too.

- The GDS pre-workshop is there to help you – I would be wary of describing the GDS pre-workshop as a mock assessment, as for us it was much more than that. It is an opportunity not only to practise parts of what you have prepared for the assessment, but also to ask for help in areas you may be struggling with or feel less confident about (and there is no harm in being upfront about it). Our manager made it very clear that we did not need to go into the workshop fully prepared with final slides, but should rather use it as an opportunity to get our work peer-reviewed so that we could improve our preparation for the assessment. It was also quite informal, and we broke up into smaller groups according to our specialist areas. Our workshop was 2 weeks before the assessment, which was useful as it gave us time to refine our slides and answers and to address the suggestions and areas for improvement raised by the mock assessors. For example, I presented the forward user research plan to the user researcher assessor, who thought it was very thorough and suggested observing one of the user groups too. He also suggested I trim down the slides, which I did. We also realised that the demo of the service was taking far too much time, and that we needed to provide more detailed context about the service before the demo so that it could be better understood. We all left the workshop with clear action plans of what we needed to do before the assessment.

- Document research details as you are conducting the research – Whether or not you have an upcoming assessment, it is important to document the details of your research, including the aims, rationale and limitations of each study or phase. The assessors will want specific details, for example how many ESL (English as a second language) users were in your sample or how many were dyslexic. Having come from academia, where we document everything, I find this comes naturally. But we struggled a bit with this as a team: although our previous research had been documented, the details were not recorded where we expected them to be. The team had also conducted multiple types of research with different users in multiple locations, sometimes even in parallel, so it was not always easy to recall numbers, and some people had also left the team.

- Prepare answers to difficult questions where possible – One of the ways you can identify difficult questions is by taking a step back and critiquing your own research, or asking a colleague to do that. The pre-workshop may throw up some difficult questions too, and that is good, as you then have two weeks to dig into your data and prepare the answers. For example, we had not conducted sufficient usability testing with a very small group of users with assisted digital needs. We were upfront about that, provided a rationale for it, and made it very clear exactly where it fitted into our future research plan. There will always be questions that you are not prepared for, but don’t worry, take a deep breath, refer back to your notes/laptop if you need to, or ask if you can come back to that question before the end of the assessment. The assessment is not a memory test.

Finally, on a practical level, it is helpful to request that the assessment take place in your own department's building. That way you can stick up your slides or diagrams days in advance, rather than frantically putting them up at the last minute and deciding where they go. It also means the team can rehearse their sections in the same room as the real assessment, and there is no last-minute panic over finding the right venue or room, getting access and sorting out the technology.

Overall, I really enjoyed the experience, as I’m always looking for opportunities to get peer feedback. Having come from academia, I was expecting much more grilling and many more challenging questions. I feel we benefited as a team: apart from the bonding, the experience has given us huge confidence going into public beta. I am also pleased to share that 3 working days later we received an email with the good news that we had passed the assessment, having met the criteria in all areas. We are now looking forward to going into public beta and putting the forward research plan into action.
