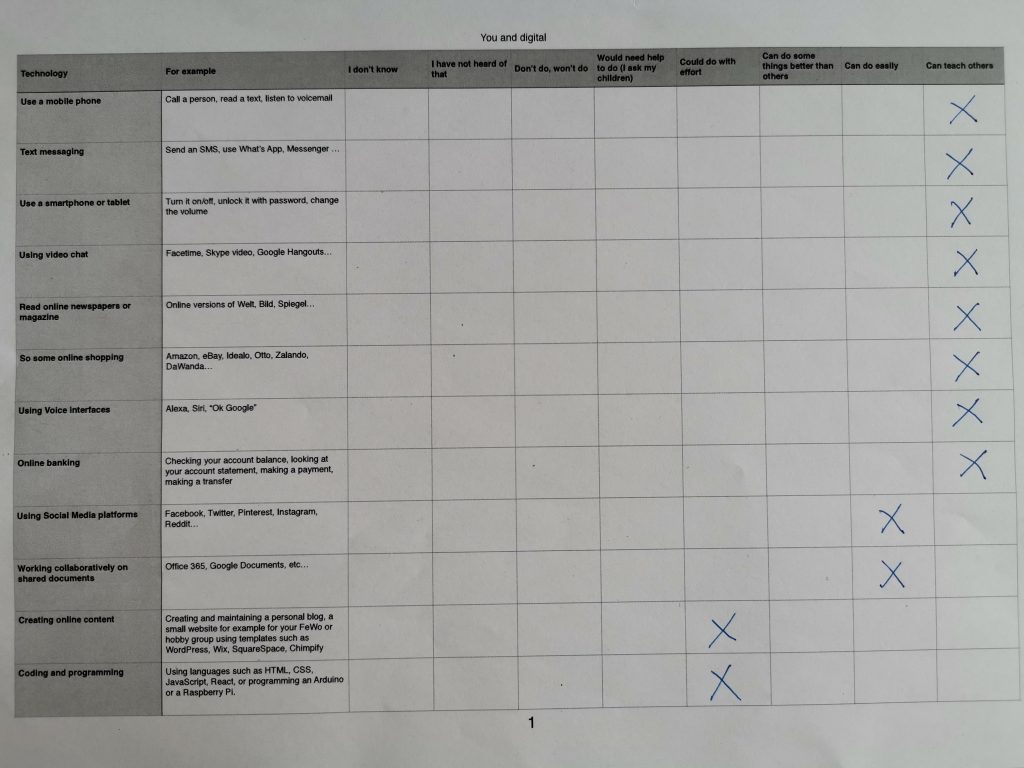

Whilst conducting user research, it is important to be aware of your users’ digital competency, so that the participants you include span the whole of GDS’ digital inclusion scale. Doing this will also help you identify assisted digital needs. Some in government appear to be doing this qualitatively, by asking questions such as ‘Which devices do you use?’ and ‘What do you do with them?’. However, my aim was to find a measure that could be used across different studies (qualitative and/or quantitative), whether conducted in person, in groups or remotely. I started by looking at different digital skills measures, including this one developed by LSE academics. In the end, I decided to review and amend the popular Basic Digital Skills framework (see below) to suit our research needs and participants.

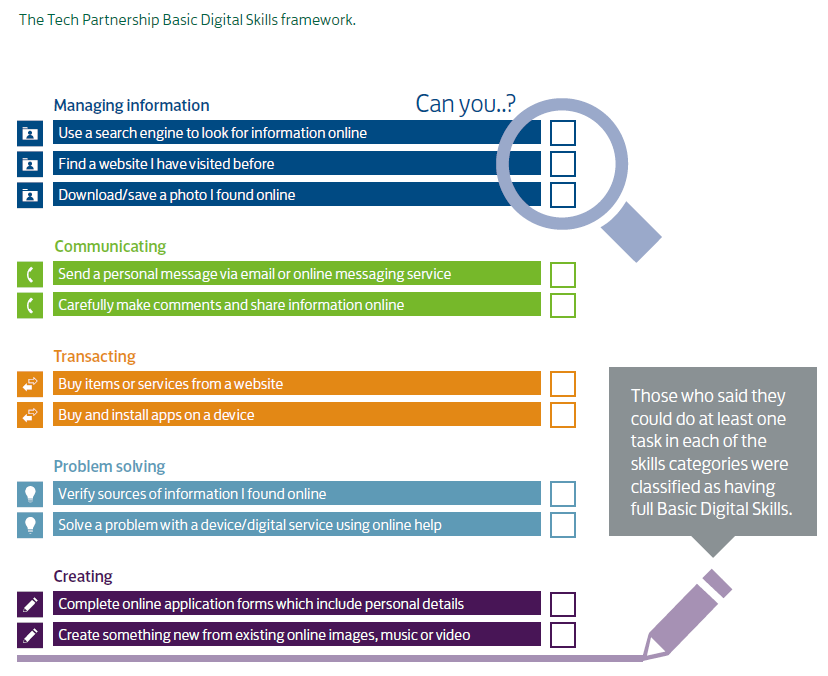

The Basic Digital Skills framework

The Basic Digital Skills framework is informed by the Government’s Essential Digital Skills Framework. Developed by Go ON UK and owned by The Tech Partnership and Lloyds Bank, it has been used each year since 2015 to measure the digital skill levels of around 9,000 adults across the UK. The measure is currently documented as follows (although there are reports of it being updated):

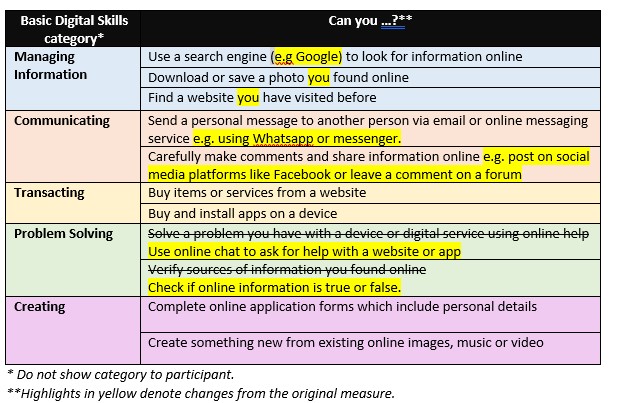

Simplifying the language

This framework has traditionally been used in face-to-face research, where the researcher is present to explain questions further or give the participant examples. For our current research, we need a measure that participants can fill in themselves, either in person or online. This is because our team has found that some of the users we are working with often conceal the truth from us, as we are a regulator (Ofsted) that requires them to have basic digital skills. Giving them the option to fill in the measure alone and anonymously may reduce their apprehension and give us a more accurate picture. Therefore, taking inspiration from the simpler wording in the Digital Participation Charter, I amended the wording of the measure as follows (changes are highlighted in yellow):

Response options

In the original Basic Digital Skills framework, respondents were presented with the 11 digital tasks and asked two questions:

- Which tasks could you do if asked? (Yes/No)

- Which tasks have you done in the last three months? (Yes/No)

Those who said they could do at least one task in each of the skills categories were classified as having full Basic Digital Skills. It is not clear how the results from Question 2 would be analysed or how they contribute to the measure.
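To make the classification rule concrete, here is a minimal sketch of how answers to Question 1 could be scored. It assumes each answer is stored as a yes/no value per task and that every task belongs to exactly one skills category. The task and category names are hypothetical placeholders (four tasks instead of the framework’s 11), not the framework’s actual wording.

```python
# Hypothetical task-to-category mapping: placeholder names only,
# not the actual tasks or categories in the Basic Digital Skills framework.
TASK_CATEGORIES = {
    "task_a": "category_1",
    "task_b": "category_1",
    "task_c": "category_2",
    "task_d": "category_3",
}


def has_full_basic_digital_skills(answers: dict[str, bool],
                                  task_categories: dict[str, str]) -> bool:
    """Return True if the respondent answered 'Yes' (could do if asked)
    to at least one task in every skills category.

    A task with no recorded answer is treated as 'No' (an assumption).
    """
    all_categories = set(task_categories.values())
    # Categories in which the respondent can do at least one task.
    covered = {
        category
        for task, category in task_categories.items()
        if answers.get(task, False)
    }
    return covered == all_categories


respondent = {"task_a": True, "task_c": True, "task_d": False}
print(has_full_basic_digital_skills(respondent, TASK_CATEGORIES))
```

For this respondent the result is `False`, because no task in `category_3` was answered ‘Yes’. Note that the answers to Question 2 (tasks done in the last three months) play no part in this rule, which reflects how unclear their role in the measure is.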

Ria Jesrani from the Government Digital Service suggested that the scale for Question 1 should be changed to the following:

- I have no idea what this is

- I wouldn’t know how to do this alone

- I might be able to do this, with support

- I feel comfortable doing this alone

- I could teach others how to do this.

I agree that this scale is better suited to assessing digital competency, as it gives a more accurate picture of the participant’s confidence and assisted digital needs. However, for participants filling it in alone, five options may be too many, and you risk losing participants (especially online) if the survey options appear too complicated.

Analysis of responses

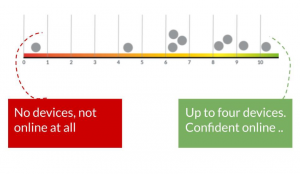

As mentioned above, those who said they could do at least one task in each of the skills categories were classified as having full Basic Digital Skills. However, the question is: how do we add up the results to create a measure of competency that goes beyond this basic classification? If someone can do all of the tasks, for example, what does that mean? And how does this map onto GDS’ digital inclusion scale?

What we intend to use and test

We plan to use the amended measure mentioned above in our next phase of research. But I still feel we should be using a more concise measure for online surveys, and I will continue looking for one (or explore the possibility of developing one). User research colleagues from NHS BSA very kindly shared with me a five-question digital engagement survey that they are using, which was developed by Dr Grant Blank for the government Digital Engagement Research Working Group (further information here). Some would argue that digital engagement is distinct from digital competency. However, where possible, I am going to test the digital engagement survey alongside the one I have amended to see how the results compare, in an attempt to assess its accuracy. I hope to report back the results so that they can benefit other teams too.

Other approaches

Since writing this piece, I have come across other approaches which could potentially be used too, depending on the type of study that you are running and the type of users you have.

- Simon Hurst’s Approach – This approach uses 7 questions that user’s fill in, and the results are then mapped onto the digital inclusion scale. This is an approach that could work well, especially if handed out as a paper survey (but would probably not work too well for phone interviews). I am keen to try it soon.

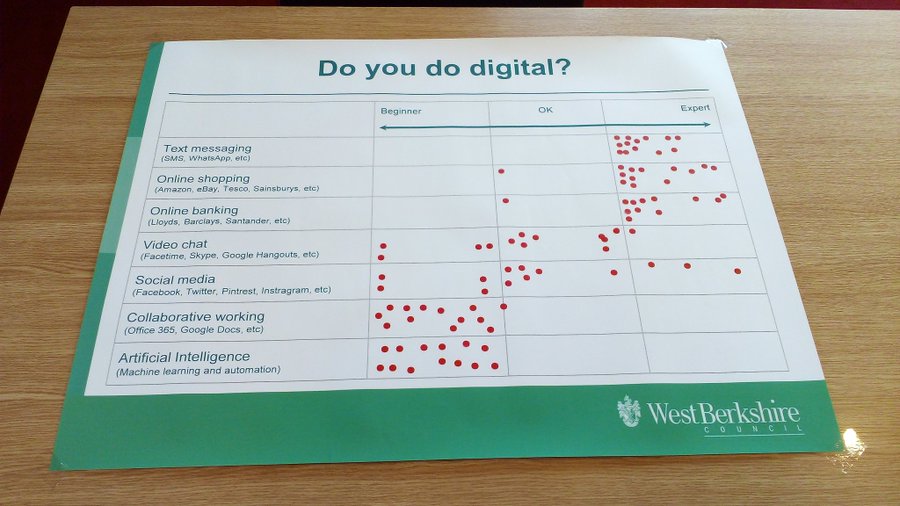

- Do you do digital? – This approach was shared by Phil Rumen on Twitter. Their internal users were asked to map where they felt their digital skills were:

If used, this approach could be amended by adding categories such as ‘I have not heard of it’, ‘I refuse to use such technology’ and ‘I don’t know’ (thanks to Caroline Jarrett for the latter two). My hesitation with this approach is that people understand the words ‘beginner’, ‘OK’ and ‘expert’ differently, which could affect the validity of their responses. I would therefore be hesitant to use it in a setting where we cannot consistently explain what these terms mean.

3. What do you do on each device? – As mentioned at the beginning of this piece, this approach involves asking people which devices they have and what they do with them. Jane Reid is an advocate for this approach and feels it works especially well for understanding where users will get stuck and what type of assisted digital support they will need. It is also a good conversation starter and less embarrassing for people at the lower end of the scale. I like this approach for face-to-face research. That said, I still think the outcome should be mapped against something; otherwise, placing users on the scale can become a guessing game, whereas mapping ensures a consistent approach across the team. Jane, however, uses this approach mainly to identify where support is needed in a service and what type, which would be relevant to many services across government.

4. Amy Everett’s Cards – In her own words, Amy described this card approach on Twitter: “From a lot of work I did in assisted digital it did seem that that user’s context (the service they needed to do, the skills/confidence they had, the access they had etc) all played a part in placing someone on the scale. I made these cards to think about all the aspects involved:

5. Sophie’s Grid – This new grid approach by Sophie is still being tested, but is based on Twitter conversations and the table I shared above. See below or here for further information:

6. UK Intellectual Property Office’s approach – Thanks Sophie for sharing this:

7. Vita’s approach (DxW) – This is documented here:

8. Hackney Council’s four-question approach – Richard Smith (Lead User Researcher at Hackney Council) recently (Sep 21) kindly shared the four-question approach they use to measure digital competency, which is explained and available here. He described the approach as follows: “We’ve used it a few times and a few London authorities within LOTI have also used it within their work. It’s not perfect – we’ll keep iterating it based on what we learn.”

In the meantime, I would be interested to hear what measure you or your team use to assess the digital competency of your users.

Love this post, it’s very helpful in my work. I have one question:

These two choices seem similar to me, can you clarify how you differentiate them?

* I wouldn’t know how to do this alone

* I might be able to do this, with support

Thank you!

Thanks Pam, that is a good question. I understand the first as ‘I can’t do this alone’ and the second as ‘I may be able to do this with a bit of help’. However, you are right: I think these options need to be tested with users to get the wording ‘correct and consistent’ before they can be used. I will be sharing a post soon on validating survey questions, which should help with this.
